1. Download and install sbt on Windows

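Download the sbt package from the sbt website and unzip it into a directory of your choice. No installer is needed, but you do need to set up the environment variables.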

Create a new variable SBT_HOME. Set its value to the path where sbt was unzipped, for example C:\Users\***\Tools\sbt-0.13.15\sbt (preferably not on the C: drive).

Then add %SBT_HOME%\bin to PATH.

To check that it worked, run this in a command prompt: sbt sbtVersion

2. Install the Scala plugin in IntelliJ IDEA

File -->Settings-->Plugins-->Scala-->install

With the plugin installed, you don't need a separate Scala installation on your machine.

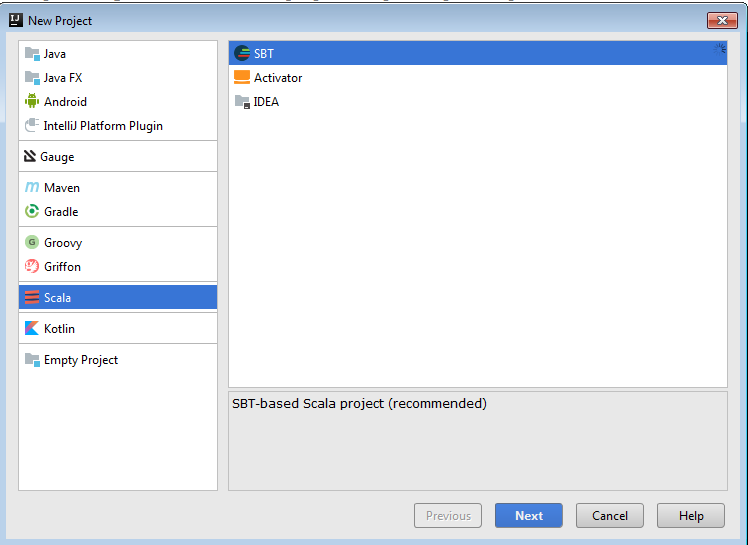

3. Create a new SBT project

File-->new-->project-->scala-->SBT

-->Next

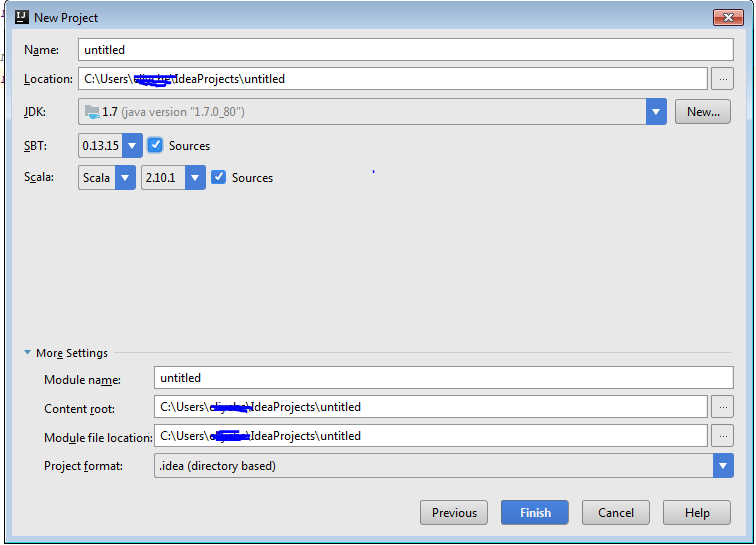

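Make sure the versions match. Spark 1.6.0 is built against Scala 2.10, so the project needs a Scala 2.10.x version.

You can check the required version on the Spark website. For example, for Spark 1.6.0: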

-->finish.

4. Edit build.sbt

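```scala
name := "sparkTest"

version := "1.0"

scalaVersion := "2.10.1"

libraryDependencies += "org.apache.spark" %% "spark-core" % "1.6.0" % "provided"
```

The "provided" scope keeps spark-core out of the packaged jar. The Spark installation supplies it at runtime when the job is launched with spark-submit.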
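The %% operator appends the Scala binary version to the artifact name. For example, "spark-core" with scalaVersion 2.10.1 becomes spark-core_2.10. The same suffix appears in the output directory and file name of the packaged jar.

The sketch below illustrates that naming convention. It assumes Scala 2.x, where the binary version is major.minor. The helper object ArtifactNaming and its methods are hypothetical approximations written for illustration. They are not sbt's actual implementation.

```scala
// Hypothetical helper approximating sbt's artifact naming for Scala 2.x.
// This is an illustration only, not sbt's real implementation.
object ArtifactNaming {
  // "2.10.1" -> "2.10": for Scala 2.x the binary version is major.minor
  def scalaBinaryVersion(full: String): String =
    full.split('.').take(2).mkString(".")

  // Approximation of sbt's normalizedName: lowercase, runs of non-word chars -> "-"
  def normalizedName(name: String): String =
    name.toLowerCase(java.util.Locale.ENGLISH).replaceAll("""\W+""", "-")

  // What %% does to the artifact id: append "_<binary version>"
  def crossArtifact(artifact: String, scalaVersion: String): String =
    s"${artifact}_${scalaBinaryVersion(scalaVersion)}"

  // Relative path of the main jar produced by `sbt package`
  def packagedJar(name: String, version: String, scalaVersion: String): String = {
    val bin = scalaBinaryVersion(scalaVersion)
    s"target/scala-$bin/${normalizedName(name)}_$bin-$version.jar"
  }

  def main(args: Array[String]): Unit = {
    println(crossArtifact("spark-core", "2.10.1"))
    println(packagedJar("sparkTest", "1.0", "2.10.1"))
  }
}
```

With the build.sbt values above, this yields spark-core_2.10 and target/scala-2.10/sparktest_2.10-1.0.jar. That matches the jar path given in the packaging step.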

5. A simple Spark program (it counts the lines that contain "a" and "b" rather than doing a full word count)

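```scala
import org.apache.spark.SparkContext
import org.apache.spark.SparkContext._ // implicit conversions; not required since Spark 1.3, but harmless
import org.apache.spark.SparkConf

object App {
  def main(args: Array[String]): Unit = {
    val logFile = "file:///home/hadoop/cy/README.md" // Should be some file on the machine running the job
    val conf = new SparkConf().setAppName("Simple Application")
    val sc = new SparkContext(conf)

    // Read the file as an RDD of lines in 2 partitions; cache it because it is scanned twice
    val logData = sc.textFile(logFile, 2).cache()

    // Count the lines containing "a" and the lines containing "b"
    val numAs = logData.filter(line => line.contains("a")).count()
    val numBs = logData.filter(line => line.contains("b")).count()

    println("Lines with a: %s, Lines with b: %s".format(numAs, numBs))

    sc.stop() // shut down the SparkContext and release its resources
  }
}
```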
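The program above counts matching lines, not words. A real word count splits each line into words, pairs each word with 1, and sums the pairs per word. On a Spark RDD that chain would look like logData.flatMap(_.split(" ")).map(w => (w, 1)).reduceByKey(_ + _).

To try the logic without a cluster, here is a minimal sketch using plain Scala collections. The object WordCountLocal and its wordCount method are hypothetical names. Because local collections have no reduceByKey, groupBy plus a sum stands in for it.

```scala
// Local, Spark-free sketch of a word count (hypothetical names).
// The pipeline mirrors the RDD version: flatMap -> map -> reduce per key.
object WordCountLocal {
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split("\\s+").toSeq) // split each line on whitespace (like rdd.flatMap)
      .filter(_.nonEmpty)             // drop the empty token produced by leading whitespace
      .map(word => (word, 1))         // pair every word with a count of 1 (like rdd.map)
      .groupBy(_._1)                  // group the pairs by word...
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // ...and sum, standing in for reduceByKey(_ + _)

  def main(args: Array[String]): Unit = {
    val lines = Seq("spark is fast", "scala and spark")
    // Print the counts sorted by word so the output order is stable
    wordCount(lines).toSeq.sortBy(_._1).foreach { case (w, n) => println(s"$w: $n") }
  }
}
```

This version only shows what the computation does. Inside the Spark program, the RDD chain with reduceByKey is what distributes the work across partitions.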

6. Package and run

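In a command prompt, go to the project directory and run sbt package. The generated jar will be at <project path>/target/scala-2.10/sparktest_2.10-1.0.jar.

Copy the jar to a machine with Spark 1.6.0 installed. Then, from the Spark installation directory, run ./bin/spark-submit sparktest_2.10-1.0.jar. If spark-submit reports that no main class is set, add --class App. The input file referenced in the code must also exist on that machine.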

Ha, this is just a bare-bones first try. Corrections and suggestions are welcome!
